Continuing from our previous article, “Summer, 3D point cloud data of grape branches and the use of AI,” this article reports on the research we began with JA in the spring of 2018. Its aim is to make grape cultivation more efficient by streamlining the grape branch identification process and maximizing field profits. Autumn is, after all, grape season. It may be the time of year when you most often see grapes labeled “Yamanashi-grown.”

These days, we hear a lot of distressing news about grape theft during the harvest season. For example, more than 100 bunches of expensive Shine Muscat grapes have been stolen.

As a result, people involved in farming have more work to get done than we might imagine, including patrolling the fields. Recently, robots and sensors using the latest technology have been used to combat this kind of animal damage and theft, and experiments with IoT devices are being promoted in municipalities throughout Japan.

A view of a grape field in autumn

Grape harvesting takes place over a period of about a month in late summer and autumn. The bagged grapes are checked for ripeness one bunch at a time. Those that are ripe and well colored are carefully harvested and shipped so that they are not crushed or deformed. They are then distributed and go on sale, including in the stores where you normally see them.

Of course, the work does not end with the harvest. After shipping, we always prepare the soil for the following year. The first step toward more delicious grapes is spreading fertilizer and compost to build nutrient-rich soil.

Experience is an integral part of all farming, including grape growing. Many people who have farmed for years have acquired the know-how to make their crops taste better through their five senses, living through the cycle of the four seasons again and again alongside their crops.

Starting small and making improvements year after year results in a better-tasting crop than the year before. This is how experience accumulates. We have been engaged in research and development with the aim of using the latest technology to reproduce actions that are based on such experience.

This work is partly motivated by the current situation, in which farmers are aging and their numbers are decreasing.

Repeated surveys and prototypes for improvement

We identified issues in our use of 3D point cloud data and AI deep learning, and re-examined whether the camera and lens were suitable.

We also had to consider whether the flight speed set by the selected drone's autonomous navigation software was appropriate, whether the drone's altitude gave the best position for shooting, and whether usable data could be produced at all. Beyond the equipment and software themselves, there was much to review in how they were used and tested.

First of all, the drone auto-navigation software we were using flew at a low altitude, so we could not capture image data with a high lap (overlap) rate. In addition, while the drone was navigating, the safety system kicked in at an altitude of 15 meters, causing the drone to hover and the images to blur.

Once these problems came to light, we considered developing our own automated navigation software, but the cost made this difficult. We therefore decided to combine manual navigation with the conventional automatic navigation, testing and adjusting the altitude of the aircraft to suit the pilot. After repeated trials, we concluded that 18 meters was the best option.
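To illustrate why altitude matters for the lap rate, here is a minimal sketch of the usual geometric estimate of forward overlap between consecutive shots. It is not the calculation we used in the project. The field of view, flight speed, and shooting interval are hypothetical values chosen for illustration, and the altitude is treated as the height above the surface being photographed.

```python
import math


def ground_footprint_m(altitude_m: float, fov_deg: float) -> float:
    """Length on the ground covered by one image along the flight direction.

    Assumes a camera pointing straight down over flat ground.
    """
    return 2.0 * altitude_m * math.tan(math.radians(fov_deg) / 2.0)


def forward_overlap(altitude_m: float, fov_deg: float,
                    speed_m_s: float, interval_s: float) -> float:
    """Fraction (0 to 1) of each image shared with the next one."""
    footprint = ground_footprint_m(altitude_m, fov_deg)
    spacing = speed_m_s * interval_s  # distance flown between two shots
    return max(0.0, 1.0 - spacing / footprint)


if __name__ == "__main__":
    # Hypothetical settings: 60 degree field of view, 3 m/s, one shot every 2 s.
    for altitude in (10.0, 15.0, 18.0):
        rate = forward_overlap(altitude, fov_deg=60.0, speed_m_s=3.0, interval_s=2.0)
        print(f"altitude {altitude:4.1f} m -> forward overlap {rate:.0%}")
```

With the speed and shooting interval held fixed, a higher altitude enlarges the area covered by each image, so the overlap between neighboring images rises. The trade-off is that each pixel covers more ground, so fine branches are captured in less detail.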

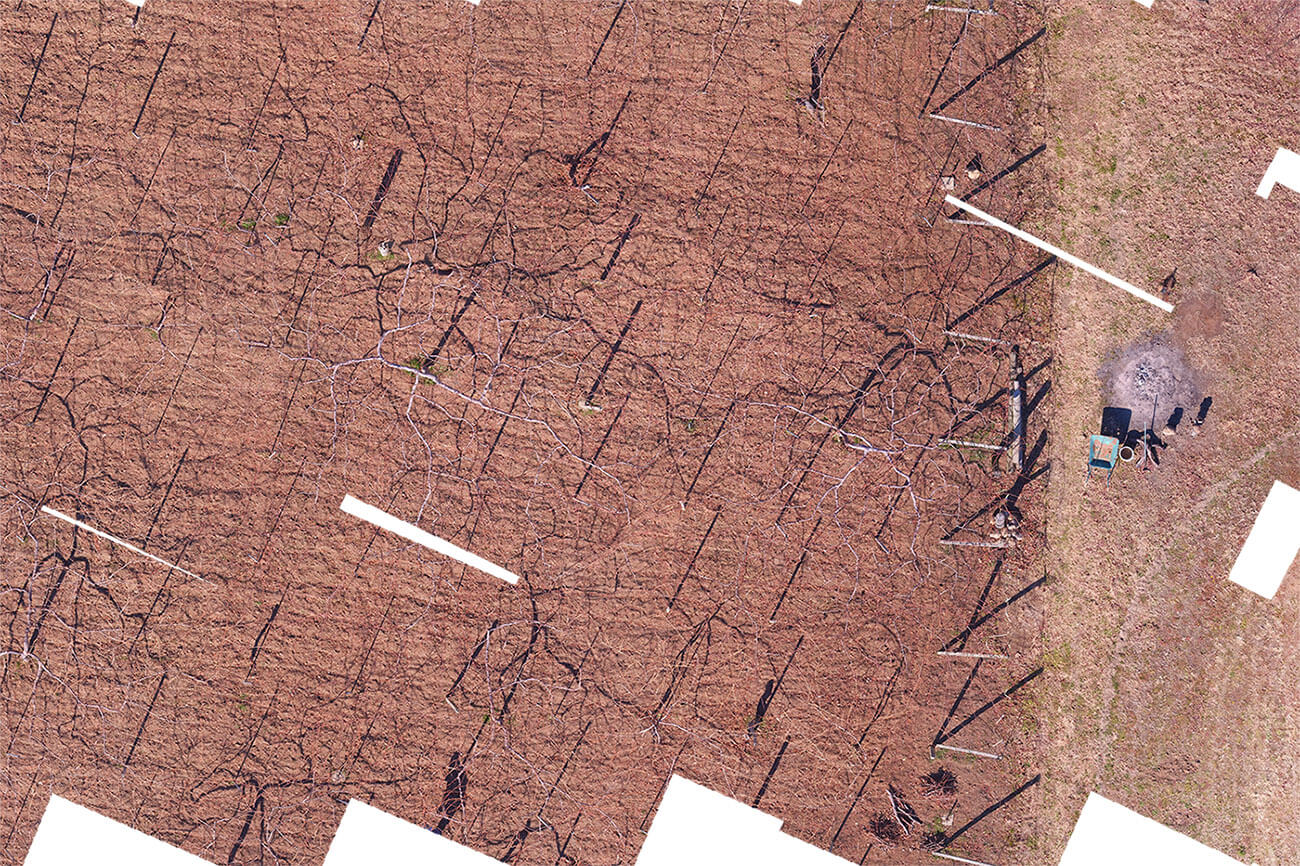

The following photos were taken by drone from that altitude and merged together manually in Photoshop.

An automatic photo-merge function is available, but I merged the photos manually for better accuracy.

Furthermore, we looked at the results of SfM (Structure from Motion: 3D shape reconstruction using photos taken from multiple viewpoints) run on a cloud server. Taking into account the limits on the lap rate achievable with a monocular camera, we considered processing the image data onto a hemispherical surface and adding depth information from a compound-eye (multi-lens) camera.

Internally, there was even a suggestion to use a stereo camera, which can quantify the distance to the subject, something that is difficult with a monocular camera. To try this, we built a prototype camera with adjustable shutter and focus. We did our best to combine the image data it generated, but concluded that building our own stereo camera was not practical this time.
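As a reminder of how a stereo camera quantifies distance, the following is a minimal sketch of the standard pinhole stereo relation, depth = focal length × baseline / disparity. It does not describe our prototype. The focal length, baseline, and disparity values are hypothetical, and the two cameras are assumed to be rectified (parallel and calibrated).

```python
def depth_from_disparity(focal_px: float, baseline_m: float,
                         disparity_px: float) -> float:
    """Distance to a point seen by two rectified, parallel cameras.

    focal_px:     focal length expressed in pixels
    baseline_m:   distance between the two camera centers, in meters
    disparity_px: horizontal shift of the point between the two images, in pixels
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a point in front of the cameras")
    return focal_px * baseline_m / disparity_px


if __name__ == "__main__":
    # Hypothetical rig: 1000 px focal length, 10 cm between the lenses.
    for disparity in (40.0, 20.0, 10.0):
        z = depth_from_disparity(1000.0, 0.10, disparity)
        print(f"disparity {disparity:4.1f} px -> depth {z:.1f} m")
```

Because depth is inversely proportional to disparity, a small matching error matters more the farther away the subject is. This is one reason a short-baseline camera becomes less precise at greater distances.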

As for the branch identification method, we decided to run demonstration tests of two identification methods and evaluate them in light of how practical the software would be to develop.

As you can see, there are times when the available technology and budget are not sufficient for the research.

But comparing then and now, smartphones with dual- and triple-lens compound-eye cameras, such as the iPhone 11 and 11 Pro, have since arrived. With technological innovation and the spread of such devices, a growing number of methods can now be considered. It also means that what was once impossible is now possible.

We will continue in the next and final article, “Winter, Pruning and Research Results.”

I would like to share with you the final chapter of our research and development, as well as a look at the fields where the grapes have been harvested and how they are moving into the next year.